Making Decisions: How To Make Better Life Choices

Making Better Decisions

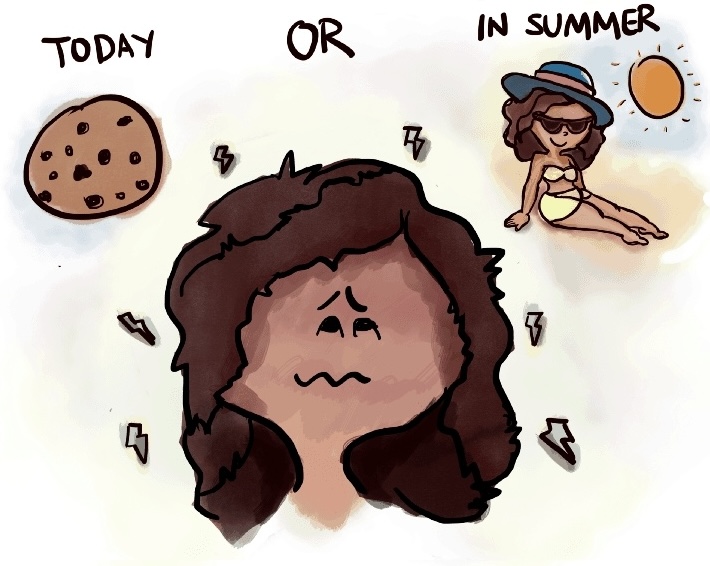

We have more decisions to make than ever before. Careers, friendships, health, and finances all demand good choices. But experts agree that unconscious biases skew our perception, creating blind spots in how we see our options. The better you understand these blind spots, the better you’ll be able to steer around them and make wiser life choices.

No one wants to look back on their life and wonder if they could have done better. To build the best life possible, we want to make decisions we can be proud of. Here we’ll show you how to use effective decision-making to make life choices that meet your goals and make the most of your strengths.

On this page you’ll discover articles on breaking down the biases and behaviors standing in your way. Start making decisions that improve your health, strengthen your relationships, and support the career you truly want. Find everything from simple hacks and new habits to the latest findings and the most rigorously verified research. This section will clue you in to life’s secrets that most people are still missing out on.

